Part I.

Background:

I am currently working on a project which involves a

Shopify online web store, and the

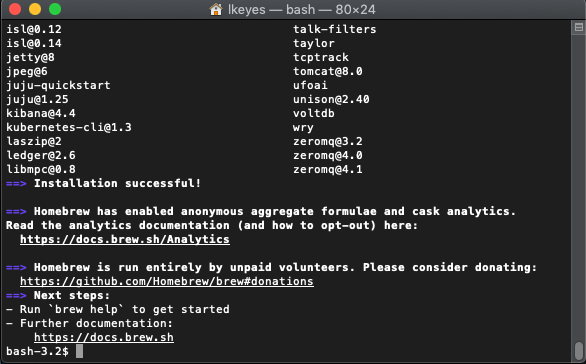

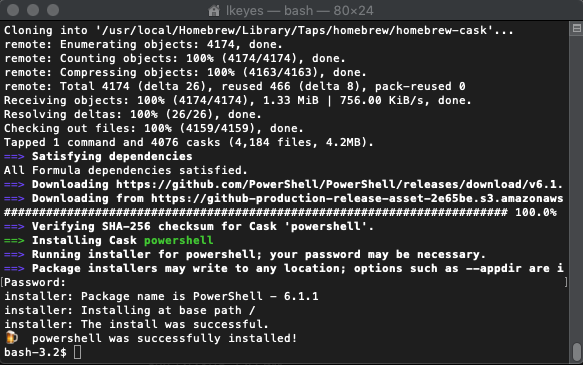

Brightpearl Inventory and CRM system. Both of these cloud-based systems have an Application Programmer’s Interface, (API) which provide a programmatic way to query and manipulate the data that has been entered via the normal web interface. They use these APIs to talk to each other and make them available to programmers who want to create custom functionality or plugins for the systems. Communication with these APIs can be done using a REST compatible client written in PHP, Python, Ruby on Rails, or a host of 3rd-generation languages like C# and Visual Basic.

REST stands for Representational State Transfer. It is an architectural style for web services built on ordinary HTTP requests, and it fills much the same role as older approaches such as SOAP, XML-RPC, and even good old remote procedure calls.

Use-Case:

I’m looking into a way to extract data from the Brightpearl inventory system: I want to query for each day’s purchases and extract the order number, customer name, and shipping information, then format that information as a .DBF file for use by the UPS WorldShip program. Note that in this example I’m interested in being a client of an existing web service and, for the moment, I only need to query the service for existing data; I don’t need to add or delete records on the server.

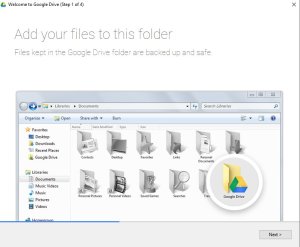

To start this odyssey, I’m using my Windows workstation. If I eventually need a web server for testing (to run PHP or Rails, for example), I’ll spin one up as a virtual machine using VirtualBox on Windows, with Ubuntu Server as the guest OS and a MySQL backend.

The Brightpearl documentation suggests several tools that can be used to send requests to the API. Perverse as it sounds, I found it helpful to install no fewer than three add-ons for Firefox and Chrome to send the API requests, which made learning the mechanics of the process a little easier.

For Chrome:

For Firefox:

Each of these three add-ons allows you to send requests to a web server, and each is slightly different. The Chrome add-on includes a parser for JSON data, which is really helpful when you are working with JSON, as is the case with Brightpearl.

Brightpearl also suggests a book from O’Reilly called RESTful Web Services by Leonard Richardson and Sam Ruby. The book was published in 2007, so although it has some useful information, it is somewhat dated. There is nothing about OAuth in it, for example.

To get started with the Brightpearl API, you have to make sure that your user account is authorized to work with the API. This is done by opening “Staff” under Setup and making sure that there is a green checkmark next to the user’s name in the API access column.

Get an Authorization Token

Brightpearl requires that you obtain an authorization token before making any other requests. The request for the authorization token takes the form of a POST request which includes your user name and password in the request payload. The URI of the request includes two variables, your Brightpearl server location and the name of your Brightpearl account, and the request is sent with a Content-Type of text/xml.

Content-Type: text/xml

where “use” is the code for the server location (here, US East) and “microdesign” is the name of your Brightpearl account ID.

The user name and password are passed as JSON name/value pairs inside the apiAccountCredentials variable:

    {
        apiAccountCredentials:{
            emailAddress:"myname@mydomain.com",
            password:"mypassword"
        }
    }

Note that the double quotes enclosing the email address and password are part of the payload and must be present.

So, if you look at the raw request that is sent, the full request looks like this:

password:”mypassword” }

}
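The authorization request described above could be assembled in Python roughly as follows. This is only a minimal sketch using the standard library: the function name `build_auth_request` and the `auth_url` argument are my own placeholders (pass in the authorization URI for your own server location and account), and the request is constructed here but not sent. The payload keys come out quoted because `json.dumps` emits strict JSON.

```python
import json
import urllib.request


def build_auth_request(auth_url, email, password):
    """Build (but do not send) the POST request for an authorization token.

    auth_url is a placeholder: supply the authorization URI for your own
    server location and account name.
    """
    payload = {
        "apiAccountCredentials": {
            "emailAddress": email,
            "password": password,
        }
    }
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        auth_url,
        data=body,
        # Content-Type as described in the text above.
        headers={"Content-Type": "text/xml"},
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen()` would actually send the request; keeping construction separate makes it easy to inspect the method, headers, and body first.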

If the request is successful, you’ll receive a hexadecimal string back, which is your authorization token.

{"response":"xxxxxx-xxxxx-xxxxx-xxxxx-xxxx-xxxxxxxxxx"}

Once you have the authorization token, it is used in subsequent requests as a substitute for your user name and password. The token expires after about 30 minutes of inactivity…so you’ll have to issue another authorization request and obtain a new token after that time.
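One way to keep track of the token and its expiry in a script is sketched below. The class name `TokenCache` and its methods are hypothetical, the 30-minute idle limit is simply the approximate figure mentioned above (the server has the final say), and treating every use of the token as "activity" is my own assumption about how the inactivity timer behaves.

```python
import json
import time


class TokenCache:
    """Remember an authorization token and when it was last used."""

    def __init__(self, max_idle_seconds=30 * 60, clock=time.monotonic):
        # 30 minutes is the approximate idle limit; adjust if needed.
        self.max_idle_seconds = max_idle_seconds
        self._clock = clock
        self._token = None
        self._last_used = None

    def store_response(self, response_text):
        """Extract the token from a body of the form {"response": "<token>"}."""
        self._token = json.loads(response_text)["response"].strip()
        self._last_used = self._clock()
        return self._token

    def get(self):
        """Return the token, or None if it is missing or has probably expired."""
        if self._token is None:
            return None
        if self._clock() - self._last_used >= self.max_idle_seconds:
            self._token = None  # caller should request a fresh token
            return None
        # Assumption: using the token resets the inactivity timer.
        self._last_used = self._clock()
        return self._token
```

When `get()` returns `None`, the script would issue another authorization request and call `store_response()` with the new reply.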

Once you have the authorization token, you can start making requests. The basic request is a “resource search”, which is a query of the Brightpearl data. Resource searches are issued with GET requests and must include the API version number. The authorization token is sent in the brightpearl-auth header along with the request.
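A resource search of this kind might be put together as in the sketch below. The function name `build_search_request` and the `search_url` argument are placeholders of mine (the URL you pass in needs to contain the API version number and the resource you want to search), and as before the request is only built, not sent.

```python
import urllib.request


def build_search_request(search_url, auth_token):
    """Build (but do not send) a GET resource search carrying the token header.

    search_url is a placeholder: supply the full search URI, including the
    API version number, for your own account.
    """
    return urllib.request.Request(
        search_url,
        headers={"brightpearl-auth": auth_token},
        method="GET",
    )
```

No request body is attached, which is what distinguishes this GET from the POST used to obtain the token.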

As a reminder, the authorization request is a POST, and the resource query is a GET.

This request returns a list of the current goods-out notes (Brightpearl’s nomenclature for a packing slip or pick-list).

Example with results:

The following GET request shows the current orders.

brightpearl-auth: xxxxxx-xxxxx-xxxxx-xxxxx-xxxx-xxxxxxxxxx

This returns a list of current orders, in JSON format. The format shows the structure of the data first, and then the actual records. Note that there are only three orders!

{"response":{"metaData":{"resultsAvailable":3,"resultsReturned":3,"firstResult":1,"lastResult":3,"columns":[{"name":"orderId","sortable":true,"filterable":true,"reportDataType":"IDSET","required":false},{"name":"orderTypeId","sortable":true,"filterable":true,"reportDataType":"INTEGER","referenceData":["orderTypeNames"],"required":false},{"name":"contactId","sortable":true,"filterable":true,"reportDataType":"INTEGER","required":false},{"name":"orderStatusId","sortable":true,"filterable":true,"reportDataType":"INTEGER","referenceData":["orderStatusNames"],"required":false},{"name":"orderStockStatusId","sortable":true,"filterable":true,"reportDataType":"INTEGER","referenceData":["orderStockStatusNames"],"required":false},{"name":"createdOn","sortable":true,"filterable":true,"reportDataType":"PERIOD","required":false},{"name":"createdById","sortable":true,"filterable":true,"reportDataType":"INTEGER","required":false},{"name":"customerRef","sortable":true,"filterable":true,"reportDataType":"STRING","required":false},{"name":"orderPaymentStatusId","sortable":true,"filterable":true,"reportDataType":"INTEGER","referenceData":["orderPaymentStatusNames"],"required":false}],"sorting":[{"filterable":{"name":"orderId","sortable":true,"filterable":true,"reportDataType":"IDSET","required":false},"direction":"ASC"}]},"results":[[1,1,207,4,3,"2014-09-18T14:15:50.000-04:00",4,"#1014",2],[2,1,207,1,3,"2014-09-29T13:20:52.000-04:00",4,"#1015",2],[3,1,207,1,3,"2014-09-29T13:25:39.000-04:00",4,"#1016",2]]},"reference":{"orderTypeNames":{"1":"SALES_ORDER"},"orderPaymentStatusNames":{"2":"PARTIALLY_PAID"},"orderStatusNames":{"1":"Draft / Quote","4":"Invoiced"},"orderStockStatusNames":{"3":"All fulfilled"}}}

If you use the “Advanced REST Client Application” for Chrome, it will decode the above so that it is readable:

    {
        "response": {
            "metaData": {
                "resultsAvailable": 3,
                "resultsReturned": 3,
                "firstResult": 1,
                "lastResult": 3,
                "columns": [
                    {"name": "orderId", "sortable": true, "filterable": true, "reportDataType": "IDSET", "required": false},
                    {"name": "orderTypeId", "sortable": true, "filterable": true, "reportDataType": "INTEGER", "referenceData": ["orderTypeNames"], "required": false},
                    {"name": "contactId", "sortable": true, "filterable": true, "reportDataType": "INTEGER", "required": false},
                    {"name": "orderStatusId", "sortable": true, "filterable": true, "reportDataType": "INTEGER", "referenceData": ["orderStatusNames"], "required": false},
                    {"name": "orderStockStatusId", "sortable": true, "filterable": true, "reportDataType": "INTEGER", "referenceData": ["orderStockStatusNames"], "required": false},
                    {"name": "createdOn", "sortable": true, "filterable": true, "reportDataType": "PERIOD", "required": false},
                    {"name": "createdById", "sortable": true, "filterable": true, "reportDataType": "INTEGER", "required": false},
                    {"name": "customerRef", "sortable": true, "filterable": true, "reportDataType": "STRING", "required": false},
                    {"name": "orderPaymentStatusId", "sortable": true, "filterable": true, "reportDataType": "INTEGER", "referenceData": ["orderPaymentStatusNames"], "required": false}
                ],
                "sorting": [
                    {
                        "filterable": {"name": "orderId", "sortable": true, "filterable": true, "reportDataType": "IDSET", "required": false},
                        "direction": "ASC"
                    }
                ]
            },
            "results": [
                [1, 1, 207, 4, 3, "2014-09-18T14:15:50.000-04:00", 4, "#1014", 2],
                [2, 1, 207, 1, 3, "2014-09-29T13:20:52.000-04:00", 4, "#1015", 2],
                [3, 1, 207, 1, 3, "2014-09-29T13:25:39.000-04:00", 4, "#1016", 2]
            ]
        },
        "reference": {
            "orderTypeNames": {
                "1": "SALES_ORDER"
            },
            "orderPaymentStatusNames": {
                "2": "PARTIALLY_PAID"
            },
            "orderStatusNames": {
                "1": "Draft / Quote",
                "4": "Invoiced"
            },
            "orderStockStatusNames": {
                "3": "All fulfilled"
            }
        }
    }
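Because the response lists the column definitions first and the records afterwards as bare arrays, a script has to pair the two up before the data is convenient to use. The sketch below shows one way to do that in Python. The helper names `rows_as_dicts` and `lookup_name` are my own, and `sample` is a trimmed-down version of the response above (three of the nine columns) just to keep the example short.

```python
import json


def rows_as_dicts(document):
    """Pair each row in "results" with the column names from "metaData"."""
    response = document["response"]
    names = [column["name"] for column in response["metaData"]["columns"]]
    return [dict(zip(names, row)) for row in response["results"]]


def lookup_name(document, table, identifier):
    """Translate an ID into its label using the "reference" section.

    The reference tables use string keys, so the ID is converted first.
    Returns None if the table or the ID is not present.
    """
    return document.get("reference", {}).get(table, {}).get(str(identifier))


# Trimmed-down sample based on the response shown above.
sample = json.loads("""
{"response": {"metaData": {"columns": [
     {"name": "orderId"},
     {"name": "orderStatusId", "referenceData": ["orderStatusNames"]},
     {"name": "customerRef"}]},
   "results": [[1, 4, "#1014"], [2, 1, "#1015"], [3, 1, "#1016"]]},
 "reference": {"orderStatusNames": {"1": "Draft / Quote", "4": "Invoiced"}}}
""")

for order in rows_as_dicts(sample):
    status = lookup_name(sample, "orderStatusNames", order["orderStatusId"])
    print(order["orderId"], order["customerRef"], status)
```

Reading the column names from the response, rather than hard-coding positions such as "element 7 is the customer reference", should keep the script working if the set or order of columns ever differs.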